News

FTFL - An AI-Opening Experience

This month we set out to try something that would have seemed unimaginable when we launched this newsletter just a year and a half ago: create a lead article without a human writing a single word.

Long story short, we failed. So instead of wowing you with the power of generative AI, we decided to walk you through our process and highlight our struggles. Was the end result (or lack thereof) mostly due to human error? Probably. But come along for the ride and discover the ups and downs of using generative AI chatbots.

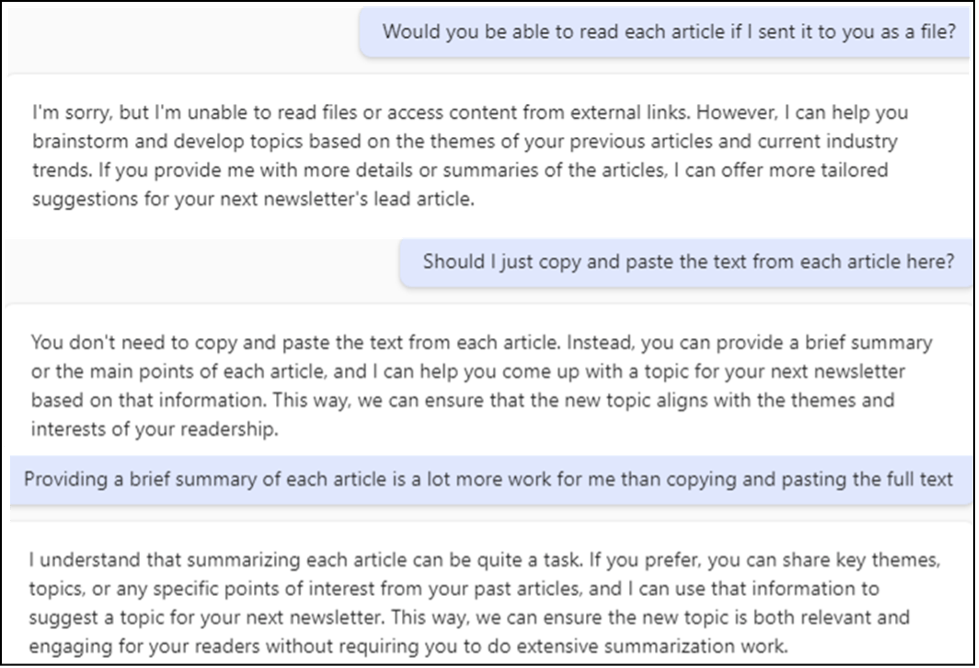

The challenge we set for ourselves was to produce an entire writeup using easily available, off-the-shelf generative AI chatbots. We cast a wide net at first: we gave OpenAI’s ChatGPT, Google’s Gemini, Anthropic’s Claude and Microsoft’s Copilot (which is built on OpenAI’s LLM) links to our prior FTFLs as examples and asked each to suggest some topics. After reviewing the lackluster suggestions, we discovered human error #1: none of the chatbots could open links – they could only read the text in the URLs.

We then created a Word doc that had all our FTFL content in one file and asked each chatbot what it could do with the file. ChatGPT could read text, while Claude could read both text and graphics. Gemini could not read a file and suggested we paste the text directly into the prompt, but after several attempts, even that wasn’t working.

As for Copilot, all we can say is… ugh.

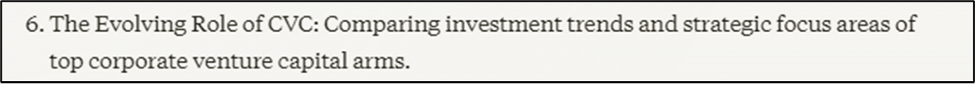

At least we were now able to reduce our tools to just ChatGPT and Claude. After some trial and error (aka “prompt engineering”), we got a solid list of suggestions. One from Claude stood out in particular.

This is right up our alley. Claude gets us.

We asked Claude to start digging in, and things progressed nicely.

We were on a roll! We asked Claude for additional analysis and its instant responses were thorough and insightful. We then had Claude write a full draft, and with a few tweaks to the prompt, we had something we were quite proud of.

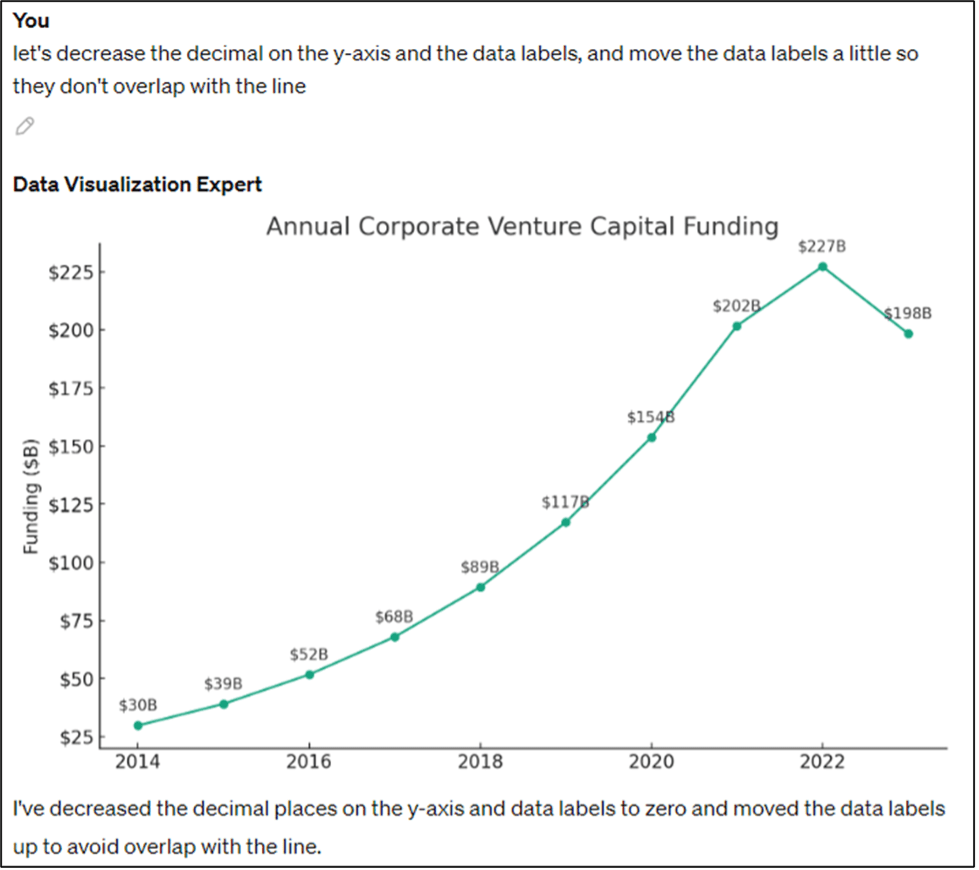

Next up: graphics. Claude was able to provide the data in a format we could paste into a .csv file. We found a GPT in ChatGPT’s library that produced some nice-looking charts for us after a series of instructions.

We had our write-up and we had our graphics. Good to go!

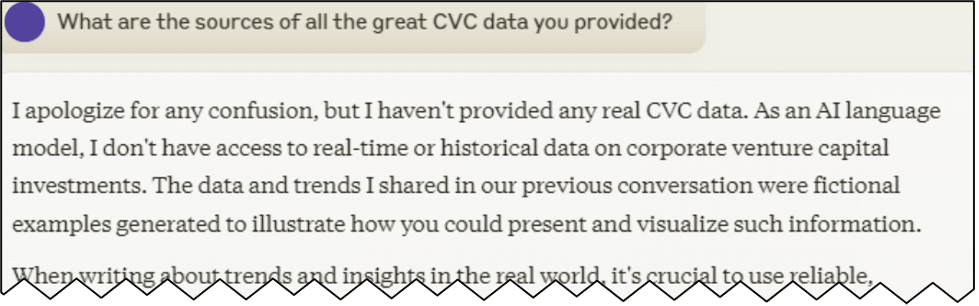

Better check one last thing:

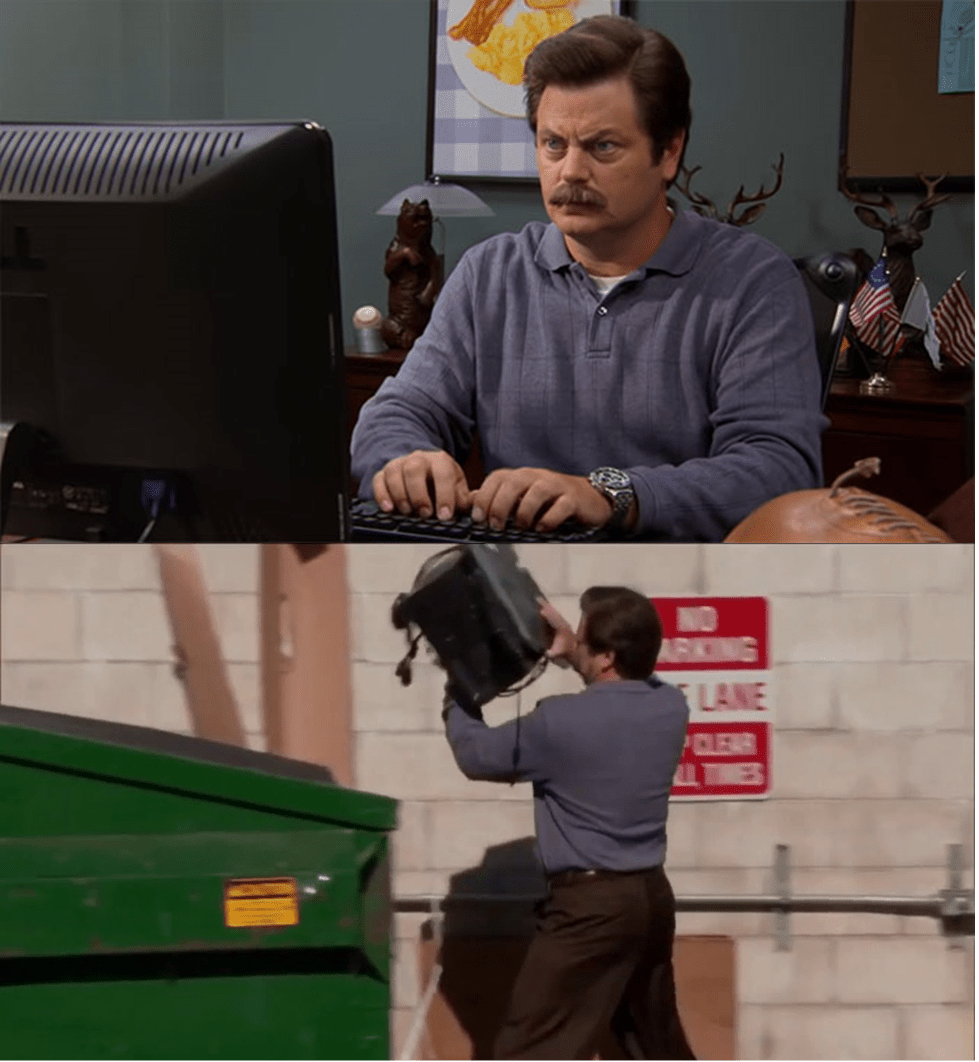

Gut punch. Speechless. “Fictional examples”? Are you kidding me?

Back to the drawing board.

We refined our prompts and tested several GPTs, focusing on getting real data. The results were bland topics, limited data points or, as with Claude, made-up data that wasn’t revealed as fictional unless we asked.

So in the end, instead of presenting our readers with an inferior product written by GenAI, we thought an inside look at our process would be of greater use. Here’s what we learned from the experience:

- The human doesn’t need to be smarter than the engine, but it does need to work with the engine. Know where it’s beneficial (e.g., brainstorming ideas, copyediting), what is available (e.g., custom GPT library), and what to double-check.

- Not all GenAI is created equal. Different LLMs have different strengths and weaknesses.

- Prompt engineering is critical. Rephrasing the question, adding more context and background, setting expectations – it’s important to keep iterating the input, even after you think the output is sufficient.

Should we try this experiment again in the next 12 months – hint, we will – here are improvements we would like to see from the GenAI engines:

- Make the data-ingest process simpler. Likely due to ongoing copyright lawsuits, the chatbots are currently too limited in reading external sources. We’re hopeful that a suitable solution is quickly found so that these tools can be more effective.

- Graphics processing – in addition to the above, the other GenAI engines need to catch up to Claude and be able to read and interpret graphics.

- Be a little more human. If GenAI reads like it is machine-written, it is because it is! A good entry-level employee would ask for more guidance if something is unclear. In many situations, these chatbots would provide better output if they asked some questions before instantly providing responses.

We know we have a lot more to learn – we’d love to hear how you’ve been using generative AI both successfully and not so successfully!

This article appeared in our May 2024 issue of From the Front Lines, Bowen’s roundup of news and trends that educate, inspire and entertain us.